Sunday, March 21, 2010

Your AI Just Wants To Have Fun

Interactive entertainment (aka computer games) has become a dominant force in the entertainment sector of the global economy. The question that needs to be explored in depth: what is the role of artificial intelligence in the entertainment sector? If we accept the premise that artificial intelligence has a role in facilitating the entertainment and engagement of humans, then we are left with new questions...

Papers due March 29...

Tuesday, February 02, 2010

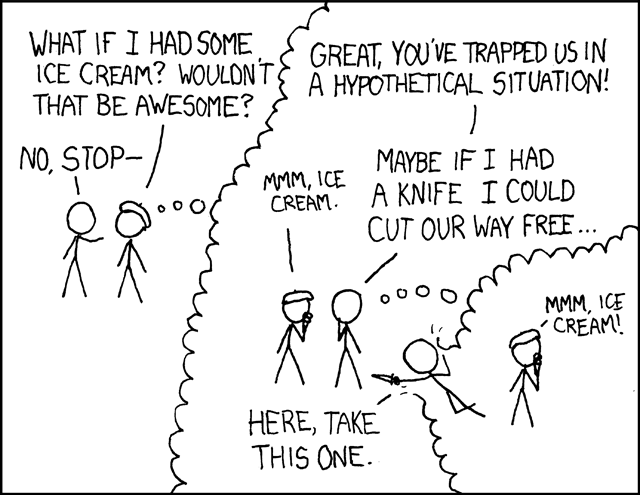

Recursion, XKCD Style

Ok, this is a good runner-up for the best definition of recursion. Douglas Hofstadter would be proud.

I think I'm going to start collecting these.

-the Centaur

Comic from xkcd, used according to their "terms of service":

You are welcome to reprint occasional comics pretty much anywhere (presentations, papers, blogs with ads, etc). If you're not outright merchandizing, you're probably fine. Just be sure to attribute the comic to xkcd.com.

So attributed.

Hm. Does the xkcd terms of service apply to the xkcd terms of service? Is that a bit like a post about recursion referring to itself? How meta.

Labels: Intelligence, Strange Loops

Saturday, January 09, 2010

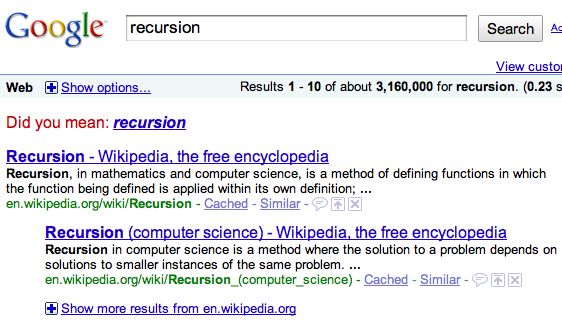

Best definition of recursion EVAH.

For those that don't get it, recursion in computer science refers to a process or definition that refers back to itself. For example, you could imagine "searching for your keys" in terms of searching everywhere in your house for your keys, which involves finding each room and searching everywhere in each room for your keys, which involves going into each room and looking for all the drawers and hiding places and looking everywhere in them for your keys ... and so on, until there's no smaller place to search.
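The keys example maps directly onto a recursive function. Below is a minimal Python sketch; the house layout and the name `find_keys` are made up for illustration. Each call either looks at a list of items lying in a place (the base case, where there's no smaller place to search) or searches every place inside the current one by calling itself.

```python
from typing import Optional, Union

# Hypothetical model of a house: a place is either a dict of named
# sub-places (rooms, nightstands, drawers) or a list of items lying there.
Place = Union[dict, list]

def find_keys(place: Place, path: tuple = ()) -> Optional[tuple]:
    """Recursively search `place` for "keys"; return the path to them, or None."""
    if isinstance(place, list):
        # Base case: no smaller place to search, so just look at the items.
        return path if "keys" in place else None
    for name, subplace in place.items():
        # Recursive case: searching a place means searching each place inside it.
        found = find_keys(subplace, path + (name,))
        if found is not None:
            return found
    return None

house = {
    "kitchen": {"counter": ["mail", "mug"], "drawer": ["spoons"]},
    "bedroom": {"nightstand": {"top drawer": ["book"], "bottom drawer": ["socks", "keys"]}},
}
print(find_keys(house))  # → ('bedroom', 'nightstand', 'bottom drawer')
```

The recursion stops because every call works on a strictly smaller piece of the house, so eventually every branch bottoms out in a plain list of items.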

So searching for [recursion] on Google involves Google suggesting that you look for [recursion]. Neat! And I'm pretty sure this is an Easter Egg and not just a bug ... it's persisted for a long time and is geeky enough for the company that encourages you to "Feel Lucky"!

-the Centaur

Labels: Intelligence, Strange Loops

Tuesday, October 13, 2009

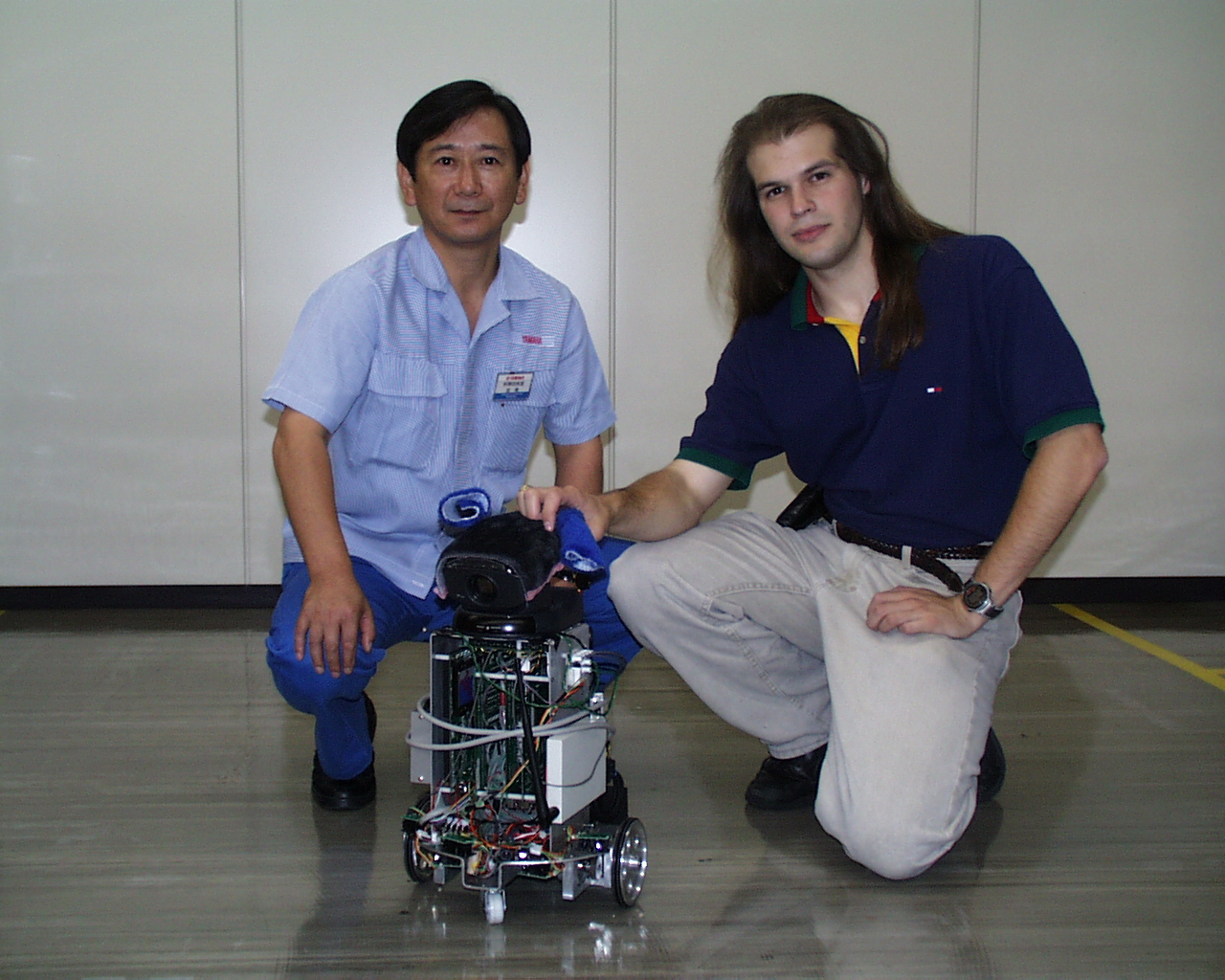

More Computer Hugs

My colleague Ashwin Ram (pictured to the left, not above :-) has blogged about the "Emotional Memory and Adaptive Personalities" paper that he, Manish Mehta and I wrote. Go check it out on his research blog on interactive digital entertainment. It highlights the work his Cognitive Computing lab is doing to lay the underpinnings for a new generation of computer games based on intelligent computer interaction - both simulated intelligence and increased understanding of the player and his relationship with the environment.

-the Centaur

P.S. The title of the post comes from my external blogpost on the paper, "Maybe your computer just needs a hug."

Labels: Intelligence

Saturday, May 30, 2009

The Ogre Mark ... 0.1?

As a teenager I used to play OGRE and GEV, the quintessential microgames produced by Steve Jackson featuring cybernetic tanks called OGREs facing off with a variety of lesser tanks. For those that don't remember those "microgames", they were sold in small plastic bags or boxes, which contained a rulebook, map, and a set of perforated cardboard pieces used to play the game. After playing a lot, we extended OGRE by creating our own units and pieces from cut up paper; the lead miniature you see in the pictures came much later, and was not part of the original game.

In OGRE's purest form, however, one OGRE, a mammoth cybernetic vehicle, faced off with a dozen or so other tanks firing tactical nuclear weapons ... and thanks to incredible firepower and meters of lightweight BCP armor, it would just about be an even fight. Below you see a GEV (Ground Effect Vehicle) about to have a very bad day.

OGREs were based (in part) on the intelligent tanks from Keith Laumer's Bolo series, but there was also an OGRE timeline that detailed the development of the armament and weapons that made tank battles make sense in the 21st century. So there was a special thrill playing OGRE: I got to relive my favorite Keith Laumer story, in which one decommissioned, radioactive OGRE is accidentally reawakened and digs its way out of its concrete tomb to continue the fight. (The touching redemption scene, in which the tank is convinced by its former commander not to lay waste to the countryside, was, sadly, left out of the game mechanics of Steve Jackson's initial design.)

But how realistic are tales of cybernetic tanks? AI is famous for overpromising and underdelivering: it's well nigh on 2010, and we don't have HAL 9000, much less the Terminator. But OGRE, being a boardgame, did not need to satisfy the desires of filmmakers to present a near-future people could relate to; so it did not compress the timeline to the point of unbelievability. According to the Steve Jackson OGRE chronology the OGRE Mark I was supposed to come out in 2060. And from what I can see, that date is a little pessimistic. Take a look at this video from General Dynamics:

It even has the distinctive OGRE high turret in the form of an automated XM307 machine gun. Scary! Admittedly, the XUV is a remote controlled vehicle and not a completely automated battle tank capable of deciding our fate in a millisecond. But that's not far off... General Dynamics is working on autonomous vehicle navigation, and they're not alone. Take a look at Stanley driving itself to victory in the DARPA Grand Challenge:

Now, that's more like it! Soon, I will be able to relive the boardgames of my youth in real life ... running from an automated tank ... hell-bent on destroying the entire countryside ...

Hm.

Somehow, that doesn't sound so appealing. I have an idea! Instead of building killer death-bots, why don't we try building some of these instead (full disclosure: I've worked in robotic pet research):

Oh, wait. The AIBO program was canceled ... as was the XM307. Stupid economics. It's supposed to be John Connor saving us from the robot apocalypse, not Paul Krugman and Greg Mankiw.

-the Centaur

Pictured: Various shots of OGRE T-shirt, book, rules, pieces, and miniatures, along with the re-released version of the OGRE and GEV games. Videos courtesy Youtube.

Labels: Intelligence, Singularity Studies, Sith Park, Superior Firepower

Monday, May 18, 2009

More on why your computer needs a hug

Thanks to the permission of IGI, the publisher of the Handbook of Synthetic Emotions and Sociable Robotics, the full text of "Emotional Memory and Adaptive Personalities" is now available online. I've blogged about this paper previously here and elsewhere, but now that I've got permission, here's the full abstract:

Emotional Memory and Adaptive Personalities

by Anthony Francis, Manish Mehta and Ashwin Ram

Believable agents designed for long-term interaction with human users need to adapt to them in a way which appears emotionally plausible while maintaining a consistent personality. For short-term interactions in restricted environments, scripting and state machine techniques can create agents with emotion and personality, but these methods are labor intensive, hard to extend, and brittle in new environments. Fortunately, research in memory, emotion and personality in humans and animals points to a solution to this problem. Emotions focus an animal’s attention on things it needs to care about, and strong emotions trigger enhanced formation of memory, enabling the animal to adapt its emotional response to the objects and situations in its environment. In humans this process becomes reflective: emotional stress or frustration can trigger re-evaluating past behavior with respect to personal standards, which in turn can lead to setting new strategies or goals. To aid the authoring of adaptive agents, we present an artificial intelligence model inspired by these psychological results in which an emotion model triggers case-based emotional preference learning and behavioral adaptation guided by personality models. Our tests of this model on robot pets and embodied characters show that emotional adaptation can extend the range and increase the behavioral sophistication of an agent without the need for authoring additional hand-crafted behaviors.

So that this article is self-contained, here's the tired old description of the paper I've used a few times now:

"Emotional Memory and Adaptive Personalities" reports work on emotional agents supervised by my old professor Ashwin Ram at the Cognitive Computing Lab. He's been working on emotional robotics for over a decade, and it was in his lab that I developed my conviction that emotions serve a functional role in agents, and that to develop an emotional agent you should not start with trying to fake the desired behavior, but instead by analyzing psychological models of emotion and then using those findings to design models for agent control that will produce that behavior "naturally". This paper explains that approach and provides two examples of it in practice: the first was work done by myself on agents that learn from emotional events, and the second was work by Manish Mehta on making the personalities of more agents stay stable even after learning.

-the Centaur

Pictured is R1D1, one of the robot testbeds described in the article.

Labels: Intelligence

Saturday, January 24, 2009

Maybe your computer just needs a hug

"Emotional Memory and Adaptive Personalities" reports work on emotional agents supervised by my old professor Ashwin Ram at the Cognitive Computing Lab. He's been working on emotional robotics for over a decade, and it was in his lab that I developed my conviction that emotions serve a functional role in agents, and that to develop an emotional agent you should not start with trying to fake the desired behavior, but instead by analyzing psychological models of emotion and then using those findings to design models for agent control that will produce that behavior "naturally". This paper explains that approach and provides two examples of it in practice: the first was work done by myself on agents that learn from emotional events, and the second was work by Manish Mehta on making the personalities of more agents stay stable even after learning.

Thanks to the good graces of the search engine that starts with a G, I've discussed the article in a bit more depth on their blog in a post titled Maybe your computer just needs a hug.

Please go check it out!

-the Centaur

Labels: Intelligence

Sunday, October 26, 2008

Emotional Memory and Adaptive Personalities

So a couple days ago I sent off the final draft of a paper on Emotional Memory and Adaptive Personalities to the Handbook of Synthetic Emotions and Sociable Robotics. Tonight I heard back from the publisher: they have everything that they need, and our paper should be published as part of the Handbook ... in early 2010.

"Emotional Memory and Adaptive Personalities" reports work on emotional agents supervised by my old professor Ashwin Ram at the Cognitive Computing Lab. He's been working on emotional robotics for over a decade, and it was in his lab that I developed my conviction that emotions serve a functional role in agents, and that to develop an emotional agent you should not start with trying to fake the desired behavior, but instead by analyzing psychological models of emotion and then using those findings to design models for agent control that will produce that behavior "naturally". This paper explains that approach and provides two examples of it in practice: the first was work done by myself on agents that learn from emotional events, and the second was work by Manish Mehta on making the personalities of more agents stay stable even after learning.

The take-home, however, is that this is the first scientific paper that I've gotten published in the past seven years, and one of the few where I was actually the lead author (though certainly the paper wouldn't have happened without the hard work and guidance of my coauthors). Here's hoping this is the first of many more!

-the Centaur

Labels: Intelligence

Thursday, May 29, 2008

Aftermath...

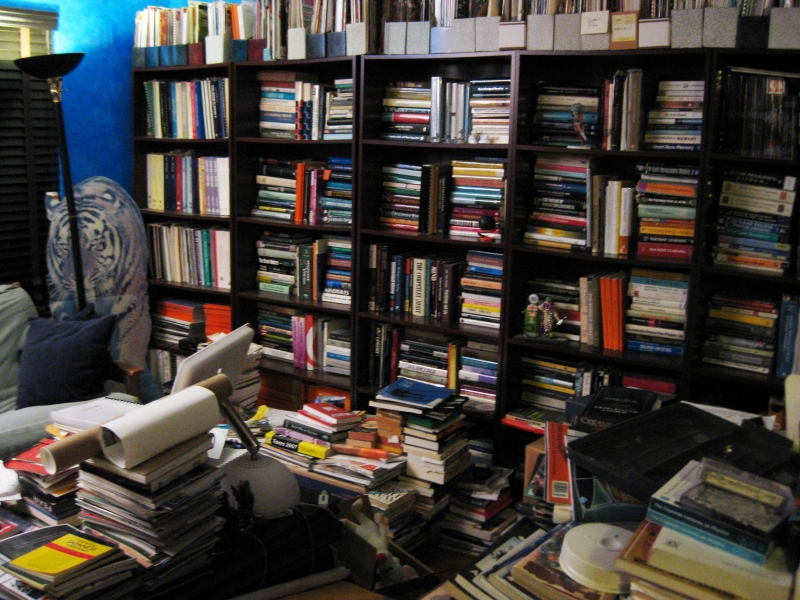

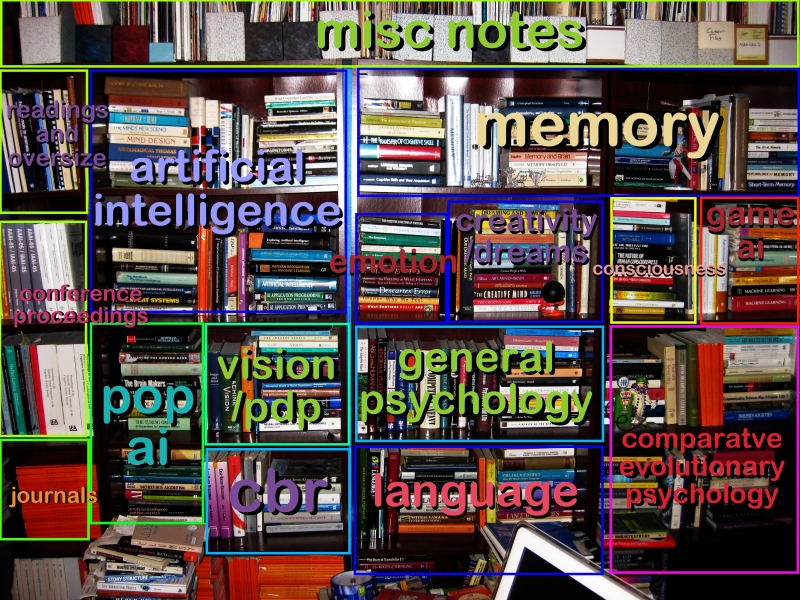

One consequence of finishing a paper is that there's a bit of debris left over...

Fortunately, now that my library is more organized, it's easier to reshelve:

No, seriously! Take a look:

I wuv my library. It feeds my ego. Or do I mean my head? Or both...

-the Centaur

Labels: Intelligence, We Call It Living

Comments:

That's just not human. And I mean that in the best possible way.

Congrats on another paper finished.

Wednesday, May 21, 2008

Unsatisfied...

So on this last paper ... I spent a year and a half working on the project, spent six intensive weeks doing a crash-course implementation of our software on a testing platform that was only available for a short time, put the project on hold for a bit during that whole dot-com dancing, and then spent many evenings over the last six months ... and most of the evenings over the last month ... putting together a 10,000 word paper.

End result? I'm unsatisfied. I feel like I and my colleagues busted our balls to get this done, and I'm satisfied with the text of the paper qua being a paper ... but scientifically, I think we'd need to put out another 50% more effort to get it up to my standards of what's really "good". We needed to do many more evaluations (not that we could, as we lost our testbed) but even given that I think the whole paper needed to be more rigorous, more carefully thought out, more in depth.

It's like I had to work my ass off just to get it to the point where I could really see how far I had to go.

Depressing.

-the Centaur

Labels: Intelligence

Wheeew....

....finished with our chapter submission to the Handbook of Research on Synthetic Emotions and Sociable Robotics. The book won't come out for another year and a half, AFAIK, but the due date for chapters was yesterday. Breaking my normal tradition, I'm not going to put up the abstract right now as the chapter is in for blind peer review. For the past month this has been ... well, you don't want to hear me whine. But trying to put out a scientific paper at the same time as blogging every day is ... bleah. Obviously, the paper had to win.

Now, back on track.

Labels: Intelligence

Friday, May 16, 2008

Two great papers on experimental design by Norvig

Warning Signs in Experimental Design and Interpretation:

When an experimental study states "The group with treatment X had significantly less disease (p = 1%)", many people interpret this statement as being equivalent to "there is a 99% chance that treatment X prevents disease." This essay explains why these statements are not equivalent. For such an experiment, all of the following are possible:

- X is in fact an effective treatment as claimed.

- X is only effective for some people, in some conditions, in a way that the experiment failed to test.

- X is ineffective, and only looked effective due to random chance.

- X is ineffective because of a systematic flaw in the experiment.

- X is ineffective and the experimenters and/or reader misinterpreted the results to say that it is.

There is no way to know for sure which possibility holds, but there are warning signs that can dilute the credibility of an experiment.
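To make the gap between "p = 1%" and "99% chance X works" concrete, here is a back-of-the-envelope Bayes' rule calculation in Python. It is an illustration, not code from Norvig's essay: the prior and power values are pure assumptions, the result is treated as "significant at the 1% level", and it only models the "random chance" possibility above, not systematic flaws or misinterpretation.

```python
def prob_effective_given_significant(prior: float, power: float, alpha: float) -> float:
    """P(treatment works | study reports a significant result), via Bayes' rule.

    prior: assumed probability, before the study, that the treatment works
    power: assumed probability the study detects a real effect
    alpha: chance an ineffective treatment still looks significant (e.g. 0.01)
    """
    true_positive = power * prior          # works, and the study catches it
    false_positive = alpha * (1 - prior)   # doesn't work, but looks like it does
    return true_positive / (true_positive + false_positive)

# Hypothetical long-shot treatment: 1-in-100 prior, a study with 80% power,
# significance threshold of 1%.
print(round(prob_effective_given_significant(prior=0.01, power=0.8, alpha=0.01), 3))  # → 0.447
```

Under these made-up numbers, a "significant" result leaves roughly a coin flip's chance that X actually works; the answer depends heavily on the prior, which the p-value alone says nothing about.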

The companion paper:

Evaluating Extraordinary Claims: Mind Over Matter? Or Mind Over Mind?

The only thing that I quibble with is the term "extraordinary" in the title of the second article. In my experience, "extraordinary" is a word people use to signal that something has challenged one of their beliefs and they're going to rake it over the coals, which Norvig does with the efficacy of intercessory prayer in his article (in a very balanced and fair way, I think). However, part of the point of Norvig's very evenhanded essay is that these kinds of problems can happen to you on things that you do believe:

A relative of mine recently went in for minor surgery and sent out an email that asked for supportive thoughts during the operation and thoughtfully noted that since the operation was early in the morning when I might be sleeping, that:

It doesn't matter, according to Larry Dossey, M.D. in Healing Words, whether you remember to do it at the appropriate time or do it early or later. He says the action of mentally projected thought or prayer is "non-local," i.e. not dependent on distance or time, citing some 30+ experiments on human and non-human targets (including yeast and even atoms), in which recorded results showed changes from average or random to beyond-average or patterned even when the designated thought group acted after the experiment was over.

I was perplexed. On the one hand, if there really was good evidence of mind-over-matter (and operating backwards in time, no less) you'd think it would be the kind of thing that would make the news, and I would have heard about it. On the other hand, if there is no such evidence, why would seemingly sensible people like Larry Dossey, M.D. believe there was? I had a vague idea that there were some studies showing an effect of prayer and some showing no effect; I thought it would be interesting to research the field. I was concurrently working on an essay on experiment design, and this could serve as a good set of examples.

After reading Tavris and Aronson's book Mistakes Were Made (but not by me), I understand how. Dossey has staked out a position in support of efficacious prayer and mind-over-matter, and has invested a lot of his time and energy in that position. He has gotten to the point where any challenge to his position would bring cognitive dissonance: if his position is wrong, then he is not a smart and wise person; he believes he is smart and wise; therefore his position must be correct and any evidence against it must be ignored. This pattern of self-justification (and self-deception), Tavris and Aronson point out, is common in politics and policy (as well as private life), and it looks like Dossey has a bad case. Ironically, Dossey is able to recognize this condition in other people -- he has a powerful essay that criticizes George W. Bush for saying "We do not torture" when confronted with overwhelming evidence that in fact Bush's policy is to torture. I applaud this essay, and I agree that Bush has slipped into self-deception to justify himself and ward off cognitive dissonance. Just like Dossey. Dossey may have a keen mind, but his mind has turned against itself, not allowing him to see what he doesn't want to see. This is a case of mind over mind, not mind over matter.

So, at least as working scientists are concerned, I would suggest Norvig's second essay should be retitled "Evaluating Claims."

Or put another way, with all due respect to Carl Sagan, I think "extraordinary claims require extraordinary evidence" is a terrible way to think for a scientist: it prompts you to go around challenging all the things you disagree with. In contrast, I think claims require evidence, and for a scientist you must start at home with the things you're most convinced of, because you're least likely to see your own claims as extraordinary.

This is the most true, of course, for papers you're trying to get published. Time to review my results and conclusions sections...

-Anthony

Labels: Intelligence, Thank You Carl Sagan

Wednesday, May 14, 2008

Pleasure and Pain, Fiction and Science

But papers are hybrid beasts: they report data and argue about what we can conclude from it. Since I write papers by core dumping my data then refining the argument, what I'm subjecting myself to when I edit my paper is a poorly argued jumble based on a quasi-random collection of facts. It's not all bad - I do work from an outline and plan - but an outline is not an argument.

This hit home to me recently when I was working on a paper on some until-now unreported work on robot pets I did about ten years ago. Early drafts of the paper had a solid abstract and extensive outline from our paper proposal, and into this outline I poured a number of technical reports and partially finished papers. The result? Virtual migraine!

But after I got about 90% of the paper done, I had a brainflash about a better abstract, which in turn suggested a new outline. My colleagues agreed, so I replaced the abstract and reorganized the paper. Now the paper was organized around our core argument, rather than around the subject areas we were reporting on, which involved lots of reshuffling but little rewriting.

The result? Full of win. The paper's not done, not by a long shot, but the first half reads much more smoothly, and, more importantly, I can clearly look at all the later sections and decide what parts of the paper need to stay, what parts need to go, and what parts need to be moved and/or merged with other sections. There are a few weak spots, but I'm betting that if I take the time to sit down and think about our argument and let that drive the paper, I will be able to clean it up right quick.

Hopefully this will help, going forward. Here goes...

-the Centaur

Labels: Dragon Writers, Intelligence

Saturday, May 10, 2008

Worthy of human treatment ...

Once as a child I asked a Jesuit whether dolphins should be treated like people if they turned out to be intelligent. I think I phrased it in terms of the question "whether they had souls", but regardless the Jesuit's answer was immediate and clear: yes, they have souls (and, by implication, should be treated like people) if they had two things - intellect and will. Years later I read enough Aquinas to understand what he meant. But when I tried to regurgitate my understanding of these concepts for this essay, as partially digested by my thinking on artificial intelligence, I found that what came up were new concepts, and that I no longer cared what Aquinas thought, other than to give him due credit for inspiring my ideas.

So, in my view, the two properties that a sentient being needs to be treated with respect due to other sentients are:

- Intellect: the ability to understand the world in terms of a universal system of conceptual structures

- Will: the ability to select a conceptual description of a desired behavior and to regulate one's behavior to match it

In this view, part of the reason that we treat animals like animals is that their intellects are weak and as a consequence their wills almost nonexistent. While animals can learn basic concepts and do basic reasoning tasks, it's extraordinarily difficult for them to put what they can learn into larger structures that describe their world - for example, it takes years of intensive training for chimps to learn the basic language competencies a human child gets in eighteen to twenty four months. Without the ability to put together "universal" structures that describe behavior, your cat can't describe behaviors much more sophisticated than "I'm not allowed in the art studio" and hence is vulnerable to all sorts of hazards and prone to all sorts of misbehavior, because it simply can't understand that, for example, it's not a good idea to go out after 2am since its owners won't be awake to let it in.

Similarly, children are wards of their parents because they haven't yet learned the conceptual structures of what they should do, and lack the self-regulation to guide themselves to follow what they have learned. Violent criminals become wards of the state for the same reason - either they didn't realize that it was a bad idea to hurt their fellow man, or more likely didn't bother to regulate themselves to avoid it. A similar problem occurs for a variety of neurodiverse people who, for one reason or another, are not able to regulate their behaviors well enough to manage their lives without the assistance of a caregiver (though having various kinds of self-regulatory dysfunctions is not necessarily a sign that someone does not have a sophisticated intellect, and there are a number of autistic people who would argue that we are too quick to discriminate; but I digress).

Regardless, intellect and will are ideas that bump around in my head a lot. Can something understand the world it lives in in abstract terms, and figure out its relationship to it? And given that understanding, can it decide what kind of life it should lead, and can it then actually follow that life? Anything that can do that gets a free pass towards being treated with respect - if you have those fundamental capabilities I'm inclined to treat you like a fellow sentient until and unless you prove me wrong.

We may seem to have gotten pretty far from souls here. But for the Christians in the audience, think about intellect and will for a moment. Something that had intellect could learn who Jesus was, and something with will could decide whether or not to follow him. And it wouldn't matter whether that was a neurotypical person, an autistic person, a talking dolphin or an intelligent machine. For the atheists in the audience, this may be an easier sell, but the point actually is still the same: something with a truly universal intellect could evaluate a system of beliefs that it was presented, and with a self-regulatory will decide whether or not it was going to agree with and/or follow that system of beliefs.

Thinking out loud here...

-the Centaur

Labels: Intelligence

Comments:

I'm actually working on an article now about when AIs should be treated ethically, and for me the core of it is their ability to feel pain, sadness, pleasure, and joy. It sounds as though you're assuming animals can feel these things, and perhaps you're right, but if a being cannot feel any pain, suffering, or dissatisfaction whatsoever, it's hard to justify the need to treat them ethically, including giving them freedom or respect.

As a thought experiment, does Commander Data (in emotionless mode) need to be treated with respect? What's the harm in NOT doing so?

Without the emotional and sensation angle we'll be in danger soon of requiring treating things like the agents in panicking crowd simulations with respect. http://www.youtube.com/watch?v=T5VZFxRJ6ss

Wednesday, May 07, 2008

I've heard memory is unreliable but this takes the cake...

Eyewitness Memory is Unreliable

Australian eyewitness expert Donald Thomson appeared on a live TV discussion about the unreliability of eyewitness memory. He was later arrested, placed in a lineup and identified by a victim as the man who had raped her. The police charged Thomson although the rape had occurred at the time he was on TV. They dismissed his alibi that he was in plain view of a TV audience and in the company of the other discussants, including an assistant commissioner of police. The policeman taking his statement sneered, "Yes, I suppose you've got Jesus Christ, and the Queen of England, too." Eventually, the investigators discovered that the rapist had attacked the woman as she was watching TV - the very program on which Thomson had appeared. Authorities eventually cleared Thomson. The woman had confused her rapist's face with the face she had seen on TV. (quote taken from Baddeley's Your Memory: A User's Guide).

It simply staggers my imagination that someone testifying about unreliable eyewitnesses would then get accused of something by an unreliable witness ... who herself had been watching him talk about unreliable witnesses and got confused!

-Anthony

Labels: Intelligence

Comments:

http://www.youtube.com/watch?v=Ahg6qcgoay4

Artificial Intelligence, Briefly

Who am I? What do I do? Why?

I am an artificial intelligence researcher.

I study human and other minds to aid in the design of intelligent machines and emotional robots.

I believe emotions are particularly important for robots because, unlike intelligent machines which normally run as computational processes on a general computer maintained by some other agent, robots have physical bodies with physical needs that they themselves are in part responsible for - and an emotional system's functions are to evaluate how our current situation meets our needs, to trigger quick reactions to get us out of harm's way, and to motivate us to pursue long-term actions to improve our lot.

I pursue artificial intelligence because right here, right now its techniques help me construct better software artifacts and deepen my understanding of the human condition, and because I hope that creating human level intelligence and beyond will improve the lot of humankind and further the progress of sentient life.

I think these things often, but I never say them. Time to change that.

-Anthony

Labels: Intelligence

Saturday, September 22, 2007

The Cloning Machine Has Gone Wild

Actually, this is from an article on Mixing Memory about how you can get an illusion analogous to the Thatcher illusion with negatives.

Again, these two pairs of faces are the same, except the top two are negatives. The one on the top-left (a) is the pure negative, which Anstis describes as being "analogous to an upside-down face." The one on the top-right (b) is negative except for the eyes and teeth, which are positive. This is analogous to the "Thatcherized" face (the one with the inverted mouth and eyes). The bottom two faces were created by reversing the contrast of the top two faces. Thus the bottom-left face (c) is normal, and the bottom-right face (d) is normal except for negative teeth and eyes. Now the contrast between the positive face with two negative features makes for a hideous, zombie-like ex-PM (I keep waiting for lightning bolts to come out of his eyes), not unlike the upright Thatcherized face in its grotesqueness. And that's the Tony Blair illusion.

The original Margaret Thatcher illusion is just as startling:

Look at the image below. You will notice some little differences, but they hardly trigger your brain to notice them... but wait! If we flip this same image, you will see the differences are anything but "unnoticeable"!

I'm told the judges would also have accepted "Proof Tony Blair is a Vampire" or "Famous British Politicians Get Possessed" as titles for this post.

-the Centaur

Labels: Intelligence

Sunday, September 10, 2006

Large-Scale Semantic Networks

One of the most interesting papers I've encountered so far was The Structure and Function of Complex Networks, a survey of mathematical and empirical studies of networks that I wish had been available when I was doing my thesis work. The most important result, I think, to come out of recent graph theory is that simple uniform and random models of networks don't tell the whole story - now, we have new mathematical tools for modeling a wide range of graph architectures, such as:

- Small World Networks. A traditional graph model says nothing about how far you may need to travel to find an arbitrary node in the graph. Think of square tiles on a floor - each tile is connected to four others, but the number of steps needed to reach any tile is a function of the distance. But anyone who's played the parlor game "Six Degrees of Kevin Bacon" knows that real-world human relationships aren't arranged this way: everyone is connected to everyone else in the world by a very short chain of relationships - on average, you can reach almost anyone in the world in only six or seven handshakes. This shows up in graph analysis as the "mean geodesic distance" between two nodes in a graph, and is an important property we should measure about our networks as they grow to determine what kind of network structure we are really dealing with. At the very least, a random graph structure shows small-world properties more similar to real-world graphs than uniform graphs, and we should consider using them over uniform models as a basis for analyses.

- Scale-Free Networks. Both uniform networks - where every node has the same link structure - and random networks - where nodes are connected at random to each other across the graph - have a definite scale or rough average size. Nodes in a random graph are like people: each one is unique, but their heights are distributed over a definite scale so there are few people shorter than three feet and no people taller than nine. Real world networks don't have this definite scale: instead, they look the "same" no matter what size scale you're looking at. Nodes in a real world graph are like the distribution of city sizes: for every city there are four times as many cities at half that size. This shows up technically in the "degree distribution": the statistical pattern of the number of links on each node. This will no doubt have significant effects on processes operating over networks, like spreading activation.
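The "mean geodesic distance" from the small-world item is just the average shortest-path length between pairs of nodes, which breadth-first search computes directly. Here is a minimal Python sketch comparing the floor-tile lattice with a random graph that has the same number of nodes and links. The function names and sizes are illustrative assumptions, and for a random graph that happens to be disconnected it averages only over pairs that can reach each other.

```python
import random
from collections import deque

def grid_graph(side):
    """'Floor tile' lattice: each node links to its up/down/left/right neighbors."""
    adj = {(r, c): set() for r in range(side) for c in range(side)}
    for (r, c) in list(adj):
        for nbr in ((r + 1, c), (r, c + 1)):
            if nbr in adj:
                adj[(r, c)].add(nbr)
                adj[nbr].add((r, c))
    return adj

def random_graph(nodes, num_edges, seed=0):
    """Place num_edges links between uniformly chosen node pairs (no duplicates).
    Assumes num_edges is well below the number of possible pairs."""
    rng = random.Random(seed)
    node_list = list(nodes)
    adj = {n: set() for n in node_list}
    added = 0
    while added < num_edges:
        a, b = rng.sample(node_list, 2)  # two distinct nodes
        if b not in adj[a]:
            adj[a].add(b)
            adj[b].add(a)
            added += 1
    return adj

def mean_geodesic_distance(adj):
    """Average shortest-path length over all reachable ordered pairs,
    using breadth-first search from every node."""
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            node = queue.popleft()
            for nbr in adj[node]:
                if nbr not in dist:
                    dist[nbr] = dist[node] + 1
                    queue.append(nbr)
        total += sum(dist.values())
        pairs += len(dist) - 1  # exclude the source itself
    return total / pairs

grid = grid_graph(20)
num_edges = sum(len(nbrs) for nbrs in grid.values()) // 2
rand = random_graph(grid.keys(), num_edges)
print(f"grid: {mean_geodesic_distance(grid):.1f}  random: {mean_geodesic_distance(rand):.1f}")
```

With identical node and link counts, the random graph's average path is typically a fraction of the lattice's, because a few long-range links act as shortcuts; that is the sense in which random graphs are "more small-world" than uniform ones.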

There are more issues in graph theory than I can readily summarize, including issues like resilience to deletion, models of growth, and so on; many of which are directly relevant to studies of semantic networks and processes that operate over them.
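One well-known growth model that produces a scale-free degree distribution is preferential attachment, where new nodes prefer to link to nodes that already have many links. The Python sketch below is a simplified Barabasi-Albert-style version, offered as an illustration of "models of growth" rather than anything from the papers above; `n`, `m` and the function names are assumptions.

```python
import random
from collections import Counter

def preferential_attachment(n, m, seed=0):
    """Grow an n-node graph in which each new node links to m existing nodes
    picked with probability proportional to their degree (a simplified
    Barabasi-Albert "rich get richer" growth model)."""
    rng = random.Random(seed)
    # Seed graph: a complete graph on m + 1 nodes, so every node starts with links.
    adj = {i: set(range(m + 1)) - {i} for i in range(m + 1)}
    # Each node appears here once per link it has, so a uniform pick from this
    # list is a degree-proportional pick of a node.
    endpoints = [node for node, nbrs in adj.items() for _ in nbrs]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:  # m distinct targets
            targets.add(rng.choice(endpoints))
        adj[new] = set(targets)
        for t in targets:
            adj[t].add(new)
            endpoints.extend((new, t))
    return adj

def degree_distribution(adj):
    """Map each degree k to the number of nodes that have exactly k links."""
    return Counter(len(nbrs) for nbrs in adj.values())

g = preferential_attachment(n=2000, m=2)
dist = degree_distribution(g)
mean_degree = sum(k * count for k, count in dist.items()) / len(g)
print(f"mean degree {mean_degree:.2f}, max degree {max(dist)}")
print(sorted(dist.items())[:6])  # most nodes have only a handful of links
```

The mean degree here is about four, as it would be in a random graph with the same number of links, but the degree distribution has a long tail: a few early "hub" nodes typically collect dozens of links, which a random graph of that density essentially never produces.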

Another paper, The Large-Scale Structure of Semantic Networks, applies these techniques to real-world semantic network models drawn from sources such as WordNet and Roget's Thesaurus. This paper, like the survey article Graph Theoretic Modeling of Large Scale Semantic Networks, seems to find that real semantic networks have scale-free, small-world properties that aren't found in the simpler mathematical models that people such as Francis (cough) used in his thesis.

So, anyone interested in semantic networks or spreading activation as a tool for modeling human cognition or as a representation scheme for an intelligent system would do well to follow up on these references and begin an analysis of their systems based on these "new" techniques (many of which have been around for a while, but sadly hadn't reached everyone in the semantic network community, or at least hadn't reached me, until more recently).

So check them out!

-the Centaur

Labels: Intelligence

By day, Anthony Francis makes computers smarter; by night he writes science fiction and draws comic books. He lives in San Jose with his wife and cats but his heart will always belong in Atlanta.
