Robot navigation is the problem of making robots go where we want them to. My research focuses on deep reinforcement learning for indoor robot navigation – making robots the size of people go where we want them to in spaces the size of buildings, using techniques vaguely inspired by the neural architecture of human cognition, but optimized for the kinds of computers we have. It’s fun!

My signature research areas with my great colleagues at Google Brain Robotics are:

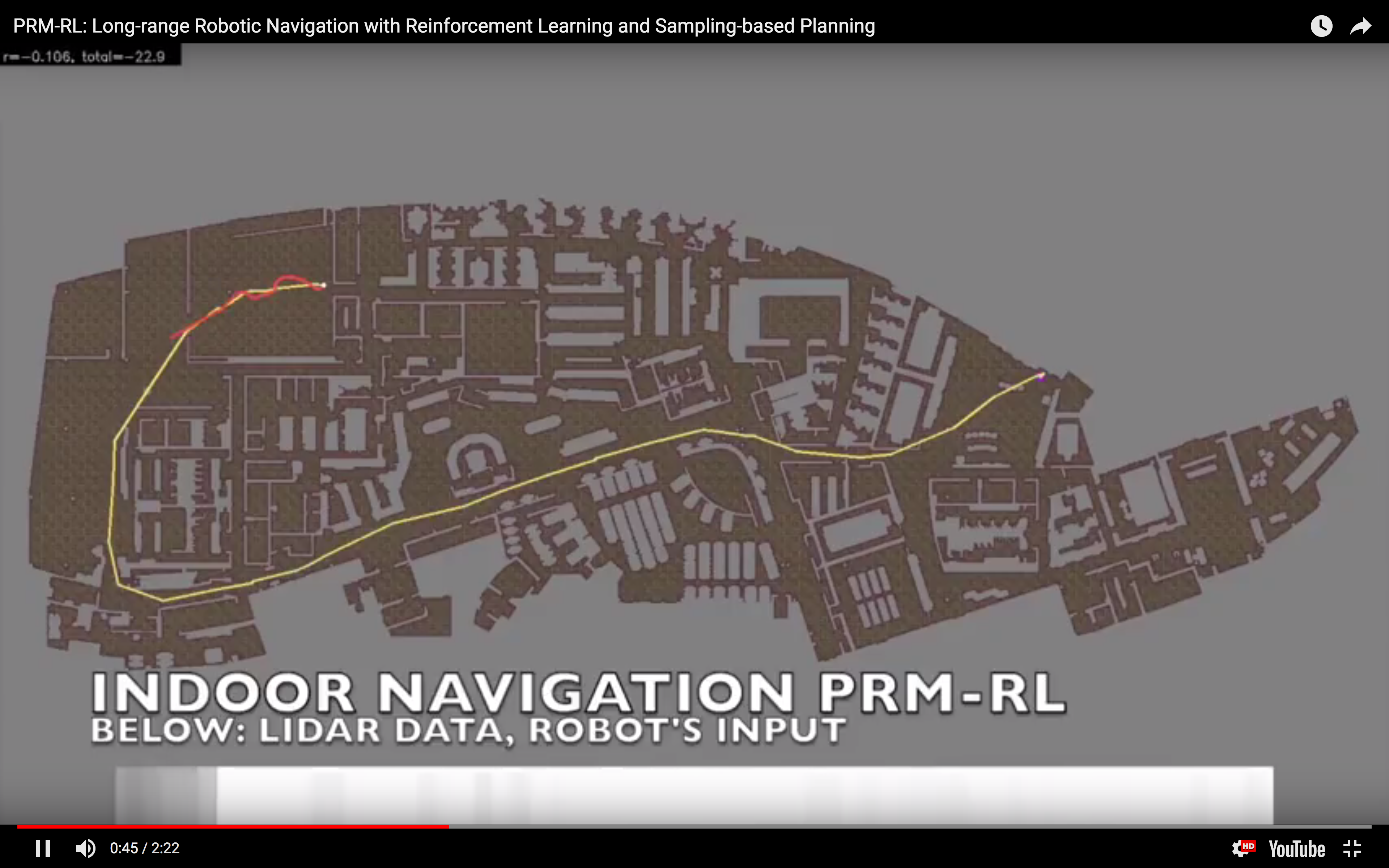

- PRM-RL: This is our award-winning work on improving robot navigation by combining deep reinforcement learning with sampling-based planning. It is a hybrid navigation architecture: we use deep reinforcement learning (RL) to learn point-to-point navigation policies, then guide them with probabilistic roadmaps (PRMs), which build a graph of navigable space in a map. The key innovation in this work, proposed by our team lead Sandra Faust, was to keep only the links in the roadmap that the RL agent could navigate in simulation. This paper won a Best Paper award at ICRA 2018.

https://arxiv.org/abs/1710.03937

- AutoRL: This is our technique to make deep reinforcement learning better and more reliable by tuning it with evolutionary hyperparameter optimization. This is another hybrid technique, in which we parameterize reward functions and network architectures and use evolutionary optimization to select the rewards and network architectures that train the best reinforcement learning agents. This leads to better policies and more stable training.

https://arxiv.org/abs/1809.10124
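
To make the PRM-RL idea above concrete, here is a minimal Python sketch of the edge-filtering step: a candidate roadmap link is kept only if a simulated agent can reliably travel it. This is an illustration under loud assumptions, not our actual implementation. The 2D world, the circular obstacle, the `rollout_succeeds` stand-in (a noisy straight-line mover instead of a trained RL policy), and every name and threshold here are hypothetical.

```python
import math
import random

# Hypothetical 2D world: circular obstacles given as (x, y, radius).
OBSTACLES = [(5.0, 5.0, 1.5)]


def in_collision(p):
    """True if point p lies inside (or on) any obstacle."""
    return any(math.dist(p, (ox, oy)) <= r for ox, oy, r in OBSTACLES)


def rollout_succeeds(start, goal, rng, step=0.25, noise=0.05, max_steps=200):
    """Stand-in for simulating a learned point-to-point policy.

    The 'policy' here just steps toward the goal with a little noise; in
    PRM-RL this role is played by the trained RL agent in simulation.
    """
    x, y = start
    for _ in range(max_steps):
        d = math.dist((x, y), goal)
        if d <= step:
            return True  # close enough to count as reaching the goal
        x += step * (goal[0] - x) / d + rng.gauss(0, noise)
        y += step * (goal[1] - y) / d + rng.gauss(0, noise)
        if in_collision((x, y)):
            return False
    return False


def build_roadmap(nodes, rng, max_dist=4.0, trials=10, min_success=0.9):
    """Keep a link only if the simulated agent reliably traverses it.

    For brevity each link is tested in one direction and treated as
    undirected; that is a simplification of this sketch.
    """
    edges = set()
    for i, a in enumerate(nodes):
        for j, b in enumerate(nodes):
            if i < j and math.dist(a, b) <= max_dist:
                wins = sum(rollout_succeeds(a, b, rng) for _ in range(trials))
                if wins / trials >= min_success:
                    edges.add((i, j))
    return edges
```

For example, with nodes `[(3, 5), (7, 5), (3, 1), (6, 1)]`, the link between the first two nodes crosses the obstacle and should be dropped, while the link between the last two runs through open space and should be kept. A path planner would then search only over the surviving links.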

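The AutoRL loop described above can be sketched in the same spirit. The version below is a toy: `train_and_evaluate` is a hypothetical stand-in that scores parameters with a simple formula instead of training an agent, the parameter names (`goal_weight`, `collision_penalty`, `hidden_units`) are invented for illustration, and it searches rewards and network size jointly for simplicity.

```python
import random

HIDDEN_CHOICES = [16, 32, 64, 128, 256]


def train_and_evaluate(params):
    """Hypothetical stand-in for training an RL agent and scoring it.

    A real run would train a policy with these reward weights and layer
    widths and return its task performance. This toy score peaks at
    goal_weight=1.0, collision_penalty=0.5, hidden_units=64.
    """
    return -((params["goal_weight"] - 1.0) ** 2
             + (params["collision_penalty"] - 0.5) ** 2
             + ((params["hidden_units"] - 64) / 64) ** 2)


def random_params(rng):
    """Sample one candidate: reward weights plus a network width."""
    return {
        "goal_weight": rng.uniform(0.0, 2.0),
        "collision_penalty": rng.uniform(0.0, 2.0),
        "hidden_units": rng.choice(HIDDEN_CHOICES),
    }


def mutate(params, rng):
    """Perturb the reward weights and occasionally resample the width."""
    child = dict(params)
    child["goal_weight"] += rng.gauss(0, 0.1)
    child["collision_penalty"] += rng.gauss(0, 0.1)
    if rng.random() < 0.2:
        child["hidden_units"] = rng.choice(HIDDEN_CHOICES)
    return child


def evolve(rng, population_size=20, generations=30, keep=5):
    """Simple elitist evolution: keep the best, refill with mutated copies."""
    population = [random_params(rng) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=train_and_evaluate, reverse=True)
        parents = population[:keep]
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(population_size - keep)]
        population = parents + children
    return max(population, key=train_and_evaluate)
```

Calling `evolve(random.Random(0))` returns the best parameter dictionary found. Because the top candidates survive each generation unchanged, the best score never gets worse from one generation to the next.
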
You can read more about this in the Google AI blogpost "Long-Range Robotic Navigation via Automated Reinforcement Learning", a survey of our team’s navigation and reinforcement learning efforts written by my manager Sandra Faust and me, or in the New York Times article "Inside Google’s Rebooted Robotics Program", which reviews not just our work but the broader Robotics at Google effort.